DCP offers cloud-based dedicated GPU servers that provide the processing power needed for today's demanding data analytics and machine learning applications, backed by expert support.

GPUs accelerate the training of complex machine learning models, enabling faster development of artificial intelligence (AI) applications such as natural language processing, image recognition, predictive analytics and large language models (LLMs).

Companies working with very large and complex datasets use GPUs to speed up data processing tasks such as ETL (Extract, Transform, Load), real-time analytics and complex queries, which can become bottlenecks on traditional CPU-based systems.

In cybersecurity, GPUs accelerate real-time analysis of network traffic, intrusion detection, and cryptographic operations. They process large datasets quickly, so potential threats and vulnerabilities can be identified sooner than with traditional CPUs.

GPUs enable the real-time processing of vast amounts of sensor data, crucial for the development and operation of autonomous vehicles and advanced robotics, facilitating tasks like object recognition, navigation, and decision-making.

In healthcare, GPUs are used for high-definition image processing in medical imaging, genomic sequencing, drug discovery and modelling, and clinical trial data analysis, producing results faster.

The media and entertainment industry leverages GPU power for rendering high-quality 3D graphics and animations in films, video games, and virtual reality (VR) environments, achieving stunning visual effects, realism, and immersive experiences.

GPUs are used to run complex climate models and simulations, which require immense computational power to predict weather patterns, track climate change and understand environmental impacts with high accuracy.

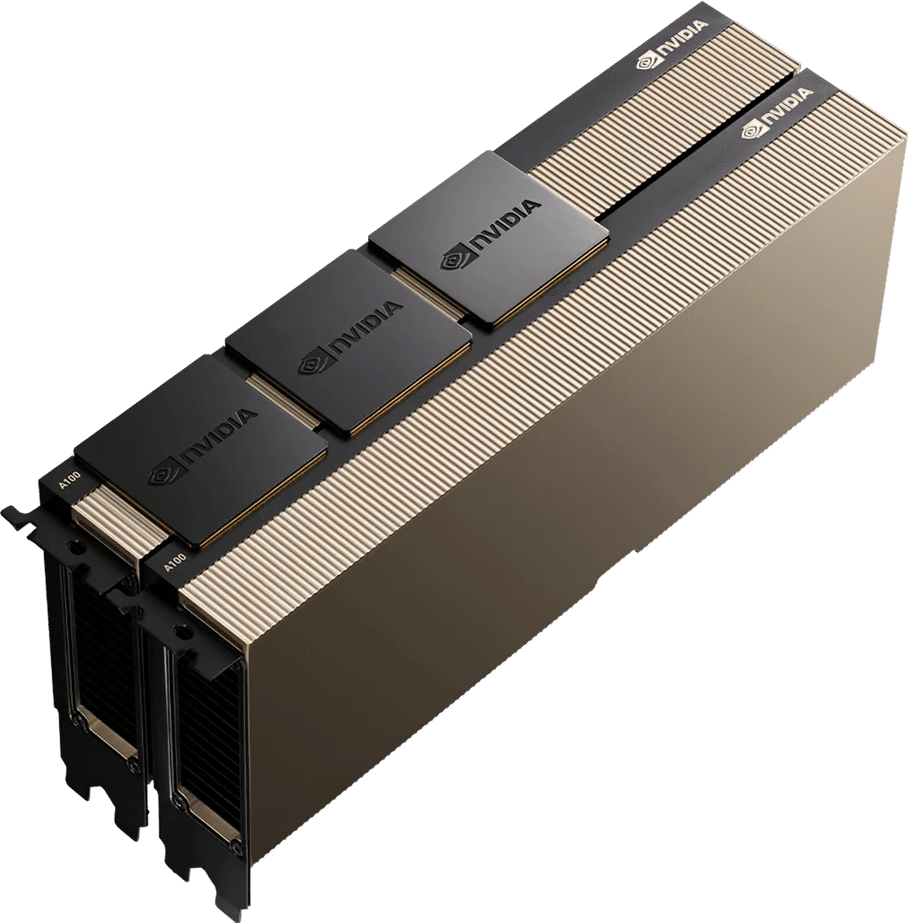

Our high-performance GPU servers use the latest NVIDIA GPUs.

We offer both pre-built and customisable NVIDIA GPU servers to suit your applications.

This is an emerging and rapidly developing area of computing, and we're here to help you at every stage of your journey. Here are some answers to common questions about GPU servers:

GPU-based High-Performance Computing (HPC) uses Graphics Processing Units (GPUs) alongside traditional Central Processing Units (CPUs) to accelerate scientific, engineering and data-intensive applications. A GPU contains thousands of simple cores designed to apply the same operation to many pieces of data at once, which makes it very effective at highly parallel work. In a GPU-based HPC system, the CPU typically handles control flow and the serial parts of a program, while parallel calculations and simulations are offloaded to the GPU, where they can run far faster than on CPUs alone. This speeds up research, modelling, simulation and data analysis in fields such as physics, biology, finance and climate science, wherever workloads involve intensive processing of large-scale data.
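Because the GPU works alongside the CPU, only the parallel part of a job is accelerated, and the serial part limits the overall gain. Amdahl's law is a standard way to estimate this. The minimal Python sketch below assumes a job splits cleanly into a serial fraction that stays on the CPU and a parallel fraction that the GPU speeds up by a fixed factor, and it ignores data-transfer overhead. The function name and example figures are hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, parallel_speedup: float) -> float:
    """Overall speedup when only part of a job is accelerated (Amdahl's law).

    parallel_fraction: share of the original runtime that can run on the GPU (0..1).
    parallel_speedup:  how many times faster that share runs on the GPU.
    Assumption: CPU-GPU data transfer time is ignored.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if parallel_speedup <= 0:
        raise ValueError("parallel_speedup must be positive")
    serial_time = 1.0 - parallel_fraction                   # remains on the CPU
    accelerated_time = parallel_fraction / parallel_speedup  # offloaded to the GPU
    return 1.0 / (serial_time + accelerated_time)


if __name__ == "__main__":
    # Hypothetical job: 90% of the runtime is parallelisable and the GPU
    # runs that part 20x faster than the CPU did.
    print(f"{amdahl_speedup(0.90, 20.0):.2f}x overall")  # 6.90x overall
```

Even with a 20x faster parallel section, the 10% serial remainder holds the whole job to under 7x, which is why profiling the serial parts matters as much as choosing a fast GPU.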

CUDA (Compute Unified Device Architecture) and OpenCL (Open Computing Language) are programming frameworks that enable GPU acceleration in High-Performance Computing (HPC).

CUDA: Developed by NVIDIA, CUDA lets programmers harness the parallel processing power of NVIDIA GPUs through extensions to languages such as C and C++. The CUDA Toolkit includes compilers, debugging tools and libraries (for example, cuBLAS for linear algebra and cuFFT for Fourier transforms) that help optimise performance for tasks like scientific simulations and deep learning.

OpenCL: Managed by the Khronos Group, OpenCL is a vendor-neutral standard for heterogeneous computing. It supports many hardware platforms, enabling developers to write programs that run on different types of processors, including GPUs, CPUs and FPGAs.

Both CUDA and OpenCL give developers the APIs, compilers and tools needed to write code that runs on the GPU, making it practical to accelerate HPC applications that depend on intensive parallel processing.
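To make the programming model more concrete, the sketch below emulates in plain Python how a CUDA kernel commonly maps each thread to one array element, using SAXPY (y = a·x + y) as the workload. It is a CPU-only illustration, not CUDA code: `saxpy_thread` and `launch_saxpy` are hypothetical names, and the `block_idx`, `block_dim` and `thread_idx` parameters stand in for CUDA's built-in `blockIdx.x`, `blockDim.x` and `threadIdx.x`. OpenCL expresses the same idea with work-items, whose index a kernel obtains with `get_global_id(0)`.

```python
from typing import List


def saxpy_thread(block_idx: int, block_dim: int, thread_idx: int,
                 n: int, a: float, x: List[float], y: List[float]) -> None:
    """Work done by one GPU 'thread': a single element of y = a*x + y."""
    i = block_idx * block_dim + thread_idx  # global index, computed as in a CUDA kernel
    if i < n:                               # guard: the last block may extend past the array
        y[i] = a * x[i] + y[i]


def launch_saxpy(a: float, x: List[float], y: List[float], block_dim: int = 4) -> None:
    """Emulate a 1-D kernel launch by visiting every (block, thread) pair.

    On a GPU these iterations run in parallel; here they run one after another.
    """
    n = len(x)
    grid_dim = (n + block_dim - 1) // block_dim  # round up so every element is covered
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            saxpy_thread(block_idx, block_dim, thread_idx, n, a, x, y)


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    y = [10.0] * len(x)
    launch_saxpy(2.0, x, y)
    print(y)  # [12.0, 14.0, 16.0, 18.0, 20.0, 22.0]
```

Each element is computed independently of the others, which is exactly the property that lets a GPU run thousands of these threads at once.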

When choosing GPUs for HPC workloads, consider the following factors carefully:

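Compute Capability: For NVIDIA GPUs, the compute capability is a version number (for example, 8.0 for the A100 and 9.0 for the H100) that identifies the GPU's architecture and the hardware features it supports. Check that it meets the minimum required by your software and libraries. It is not a performance score in itself, so compare actual throughput figures as well.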

Memory Capacity and Bandwidth: Evaluate the GPU’s onboard memory capacity (VRAM) and memory bandwidth. HPC workloads often require large datasets to be processed quickly, so ample memory capacity and high memory bandwidth are crucial for performance.

CUDA Cores or Stream Processors: The number of CUDA cores (NVIDIA GPUs) or stream processors (AMD GPUs) largely determines parallel processing capacity. More cores generally mean better performance for highly parallel tasks, but core counts are only directly comparable between GPUs of the same architecture.

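Double Precision Performance (FP64): Many scientific and engineering HPC applications depend on double-precision floating-point arithmetic. If yours does, check the GPU's FP64 throughput specifically, because GPUs designed mainly for graphics or AI often deliver only a small fraction of their single-precision (FP32) rate at FP64.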

Software and API Support: Consider the availability and optimization of software frameworks and libraries (like CUDA, OpenCL, or specific HPC libraries) for the GPU you are considering. This can significantly impact development and performance.

Power Consumption and Cooling Requirements: GPUs used in HPC often consume considerable power and generate heat. Ensure your infrastructure can handle the power requirements and provide adequate cooling to maintain performance and reliability.

Interconnect and Scalability: If you are building a GPU cluster, consider support for high-speed interconnects, such as NVLink between GPUs within a server and InfiniBand between servers, and how easily the system can scale by adding more GPUs.

Budget and Total Cost of Ownership (TCO): Determine your budget for GPU acquisition and consider the total cost of ownership, including power consumption, cooling, maintenance, and software licensing.

By carefully evaluating these factors based on your specific HPC workload requirements, you can choose GPUs that offer optimal performance, efficiency, and scalability for your applications.
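Memory capacity, memory bandwidth and FP64 throughput can be combined into a quick back-of-the-envelope check before committing to a GPU. The Python sketch below is one way to do it, assuming a simple roofline-style model: a kernel can finish no faster than the slower of (a) its arithmetic at the card's peak FP64 rate and (b) its memory traffic at peak bandwidth. The GPU figures, the 80% usable-memory headroom and the helper names are hypothetical placeholders. Real kernels rarely reach datasheet peaks, so treat the result as a lower bound.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class GpuSpec:
    """Headline datasheet figures for one GPU (example values are placeholders)."""
    name: str
    memory_gb: float       # onboard VRAM capacity, GB
    bandwidth_gb_s: float  # peak memory bandwidth, GB/s
    fp64_tflops: float     # peak double-precision throughput, TFLOPS


def fits_in_memory(gpu: GpuSpec, n_elements: int, bytes_per_element: int = 8,
                   headroom: float = 0.8) -> bool:
    """True if the data fits in the usable share (`headroom`) of VRAM,
    leaving the rest for workspace buffers and the runtime (an assumption)."""
    needed_gb = n_elements * bytes_per_element / 1e9
    return needed_gb <= gpu.memory_gb * headroom


def min_runtime_s(gpu: GpuSpec, flops: float, bytes_moved: float) -> Tuple[float, str]:
    """Roofline-style lower bound on runtime, plus which resource limits it."""
    compute_s = flops / (gpu.fp64_tflops * 1e12)         # arithmetic at peak FP64 rate
    memory_s = bytes_moved / (gpu.bandwidth_gb_s * 1e9)  # traffic at peak bandwidth
    if memory_s >= compute_s:
        return memory_s, "memory-bound"
    return compute_s, "compute-bound"


if __name__ == "__main__":
    # Hypothetical card; replace with datasheet figures for the GPU you are evaluating.
    gpu = GpuSpec("example-gpu", memory_gb=80, bandwidth_gb_s=2000, fp64_tflops=10)

    n = 2_000_000_000  # 2e9 float64 values = 16 GB
    print(fits_in_memory(gpu, n))  # True (16 GB <= 64 GB usable)

    # One DAXPY pass (y = a*x + y): 2 FLOPs and 24 bytes (read x, read y, write y) per element.
    seconds, bound = min_runtime_s(gpu, flops=2 * n, bytes_moved=24 * n)
    print(f"{seconds * 1e3:.1f} ms, {bound}")  # 24.0 ms, memory-bound
```

In this example the arithmetic alone would take about 0.4 ms, but moving the data takes 24 ms, so memory bandwidth rather than core count or FP64 rate would be the deciding specification for this kind of workload.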

The main benefits of GPU servers include:

High Performance: GPUs can process many parallel tasks simultaneously, significantly speeding up computations.

Efficiency: For highly parallel workloads, GPUs typically deliver better performance per watt than CPUs.

Scalability: Capacity can be increased by adding more GPUs or more GPU servers, which suits applications that require heavy computational power.

Flexibility: Suitable for a variety of applications, including AI, deep learning, scientific research, and 3D rendering.
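Performance per watt matters because energy cost depends on both power draw and runtime. The sketch below uses made-up figures for a hypothetical batch job to show how a GPU server can draw more power than a CPU-only server yet use less energy per job, because it finishes the work much sooner. The function names and numbers are illustrative assumptions, not measurements of any real server.

```python
def energy_kwh(runtime_hours: float, avg_power_watts: float) -> float:
    """Energy used by one run, in kilowatt-hours."""
    return runtime_hours * avg_power_watts / 1000.0


def jobs_per_kwh(runtime_hours: float, avg_power_watts: float) -> float:
    """Work completed per unit of energy: a direct performance-per-watt measure."""
    return 1.0 / energy_kwh(runtime_hours, avg_power_watts)


if __name__ == "__main__":
    # Hypothetical batch job: (label, runtime in hours, average power draw in watts).
    runs = [("CPU", 10.0, 400.0),   # CPU-only server
            ("GPU", 1.0, 1200.0)]   # GPU server: higher draw, far shorter run
    for label, hours, watts in runs:
        print(f"{label}: {energy_kwh(hours, watts):.1f} kWh per job, "
              f"{jobs_per_kwh(hours, watts):.2f} jobs/kWh")
    # CPU: 4.0 kWh per job, 0.25 jobs/kWh
    # GPU: 1.2 kWh per job, 0.83 jobs/kWh
```

The advantage only holds when the workload is parallel enough for the GPU to shorten the runtime substantially; a job that barely speeds up would simply draw more power for the same duration.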

Typical workloads that benefit from GPU servers include:

Machine Learning and AI: Training and inference of neural networks.

Data Analytics: Processing large datasets with complex calculations.

Scientific Simulations: Computational chemistry, physics simulations, and bioinformatics.

Rendering and Visualization: 3D rendering, video encoding, and image processing.

GPUs are also available in the cloud: many cloud service providers offer GPU instances that let you use GPU power without investing in physical hardware. These instances provide scalable, on-demand access to GPU resources for tasks such as AI training and data analysis.

GPU virtualisation allows multiple virtual machines (VMs) to share a single GPU or a pool of GPUs. This is achieved through technologies such as NVIDIA's virtual GPU (vGPU) software, formerly branded NVIDIA GRID, which enables resource sharing while maintaining high performance and flexibility for a range of workloads.
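When planning how many VMs a GPU server can host, a useful first approximation is to divide each GPU's memory by the amount allocated to each virtual GPU. The sketch below assumes, as a simplification, that every VM on a physical GPU receives the same fixed-size slice of GPU memory. Real vGPU deployments also depend on which profiles, hypervisors and licences your GPU supports, and the function names and figures here are hypothetical.

```python
from typing import Dict, List


def vgpus_per_gpu(gpu_memory_gb: int, profile_gb: int) -> int:
    """How many equally sized vGPUs fit in one physical GPU's memory."""
    if profile_gb <= 0 or profile_gb > gpu_memory_gb:
        raise ValueError("profile size must be between 1 GB and the GPU's memory size")
    return gpu_memory_gb // profile_gb  # leftover memory that cannot fill a whole slice is unused


def plan_capacity(gpu_memory_gb: int, gpus: int, profiles_gb: List[int]) -> Dict[int, int]:
    """Maximum VM count for each candidate profile size on a host with `gpus` identical GPUs."""
    return {p: gpus * vgpus_per_gpu(gpu_memory_gb, p) for p in profiles_gb}


if __name__ == "__main__":
    # Hypothetical host: 4 GPUs with 48 GB each; candidate per-VM sizes in GB.
    print(plan_capacity(48, 4, [4, 8, 12, 24]))
    # {4: 48, 8: 24, 12: 16, 24: 8}
```

Smaller slices let more VMs share the hardware, while larger slices give each VM more memory for demanding workloads; the right balance depends on what each VM needs to run.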